Optimizing lambda coldstarts

You thought you could just set some flags and your node.js lambda function would be bundled, tree-shaken, minified and go from ice cold to glowing hot in milliseconds? My sweet summer child. I thought that too, but then I analyzed the bundle, pored over some traces and realized how wrong I was. What follows is the rabbit hole I went down to optimize my lambda coldstarts.

How it started

I had an api with a 3.4M zipped bundle that was using require to import dependencies and a 1567 ms coldstart. Loading the secrets I needed for every request added 375 ms. Most invocations were coldstarts due to low traffic and before any processing happened there was 1974 ms of waiting. Dang. Before you tell me I can use provisioned concurrency, I know; I was more interested in what was making my coldstarts slow. This is what my package.json looked like:

"dependencies": {

"@aws-lambda-powertools/logger": "^1.12.1", //powertools packages

"@aws-lambda-powertools/metrics": "^1.12.1",

"@aws-lambda-powertools/tracer": "^1.12.1",

"axios": "^1.1.0", //for http requests

"hash36": "^1.0.0",

"sha256-uint8array": "^0.10.3",

"jose": "^4.6.0", //for jwt

"lambda-api": "^1.0", //for the api

"@aws-sdk/client-dynamodb": "^3.131.0", //for interacting with AWS

"@aws-sdk/client-iam": "^3.131.0",

"@aws-sdk/client-secrets-manager": "^3.131.0",

"@aws-sdk/client-sts": "^3.131.0",

"@aws-sdk/lib-dynamodb": "^3.131.0"

}

Note

These benchmarks were done using node.js 18, with 256MB of memory running on arm64.

Knocking down the size with a bundler

The first step was to switch to static imports and use a bundler.

Before:

const { Logger } = require('@aws-lambda-powertools/logger');

After:

import { Logger } from '@aws-lambda-powertools/logger';

For a bundler, I chose esbuild over webpack because the first rule of doing something fast is to never use something slow. Benchmarking build times, esbuild took less than a second and webpack took 29 seconds. I am using the serverless framework to build and deploy my lambda, so I added the serverless-esbuild plugin.

Here's how I added it:

npm install -D serverless-esbuild esbuild

Then I added the following to my serverless.yml:

    plugins:
      - serverless-esbuild
    custom:
      esbuild:
        bundle: true
        minify: true

What's crazy about esbuild is that without bundling the build took 4 seconds and with bundling it took about 1 second. esbuild made it faster!

Before:

> time sls package

Packaging speedrun-federation for stage dev (us-west-2)

✔ Service packaged (4s)

sls package 5.39s user 1.11s system 116% cpu 5.561 total

After:

> time sls package

Packaging speedrun-federation for stage dev (us-west-2)

✔ Service packaged (0s)

sls package 1.65s user 0.34s system 149% cpu 1.333 total

Note

If you are using the CDK to deploy, it uses esbuild under the hood, so just pay attention to the flags I'm using. You can specify them using BundlingOptions in the NodejsFunction constructor, but as @dreamorosi points out, it chokes on plugins. If you need plugins (which you only need if you use sourcemaps), you may need to use this instead.

The results were that my zip file went from 3.4 MB to 425K and my coldstart went from 1567 ms to 591 ms. My secrets loading stayed steady at 375 ms. That's an 87.5% reduction in size and a 62.3% reduction in coldstart time. Not bad for a few minutes of work.
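
For anyone who wants to redo the arithmetic, here is a minimal sketch. The helper name `percentReduction` is made up for this example, and 3.4 MB is treated as 3400 KB for simplicity.

```javascript
// Percentage reduction from `before` to `after`, rounded to one decimal place.
function percentReduction(before, after) {
  return Math.round((1 - after / before) * 1000) / 10;
}

// Zip sizes in KB and cold start times in ms, taken from the numbers quoted above.
console.log(`zip size:  ${percentReduction(3400, 425)}% smaller`);
console.log(`coldstart: ${percentReduction(1567, 591)}% lower`);
```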

Fixing useless stacktraces

But minification has its downsides: I couldn't figure out anything useful from the minified stacktraces. Sourcemaps fix this, so I added the following to my serverless.yml:

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true

I also set the corresponding node environment variable so Node 18 would use them:

    environment:
      NODE_OPTIONS: '--enable-source-maps'

This ballooned the zip file from 425K to 1.5 MB, raised my coldstart to 650 ms and the loading of my secrets to 435 ms. I was better off than baseline, but I wanted to see if I could do better.

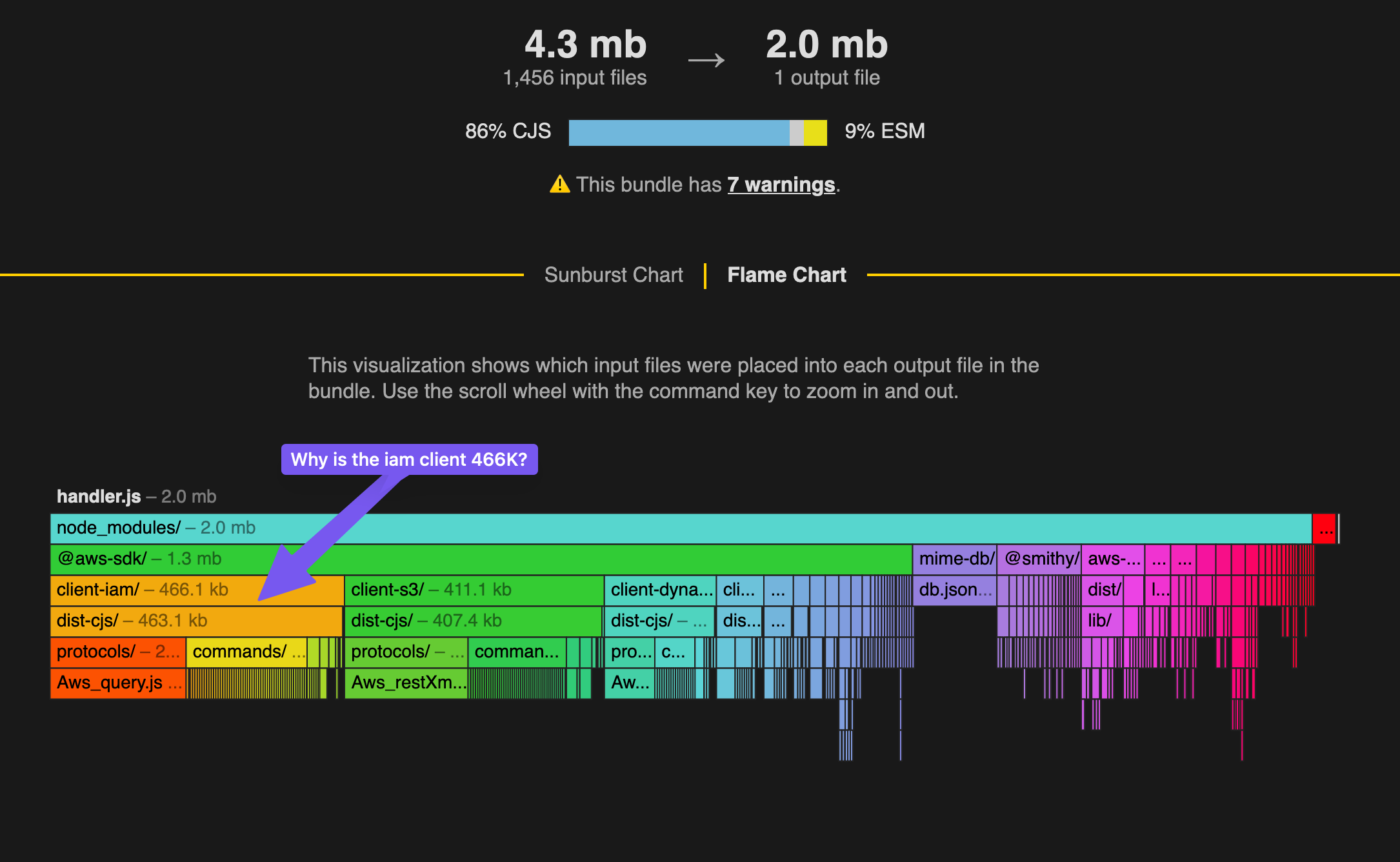

Analyzing the bundle

esbuild lets you analyze the bundle it creates by specifying the metafile flag. There is a nice analyzer on the website to visualize it. The analyzer is incredibly useful in determining what is contributing to bundle size and the reason it was included. I added the following config to my serverless.yml:

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true
        metafile: true

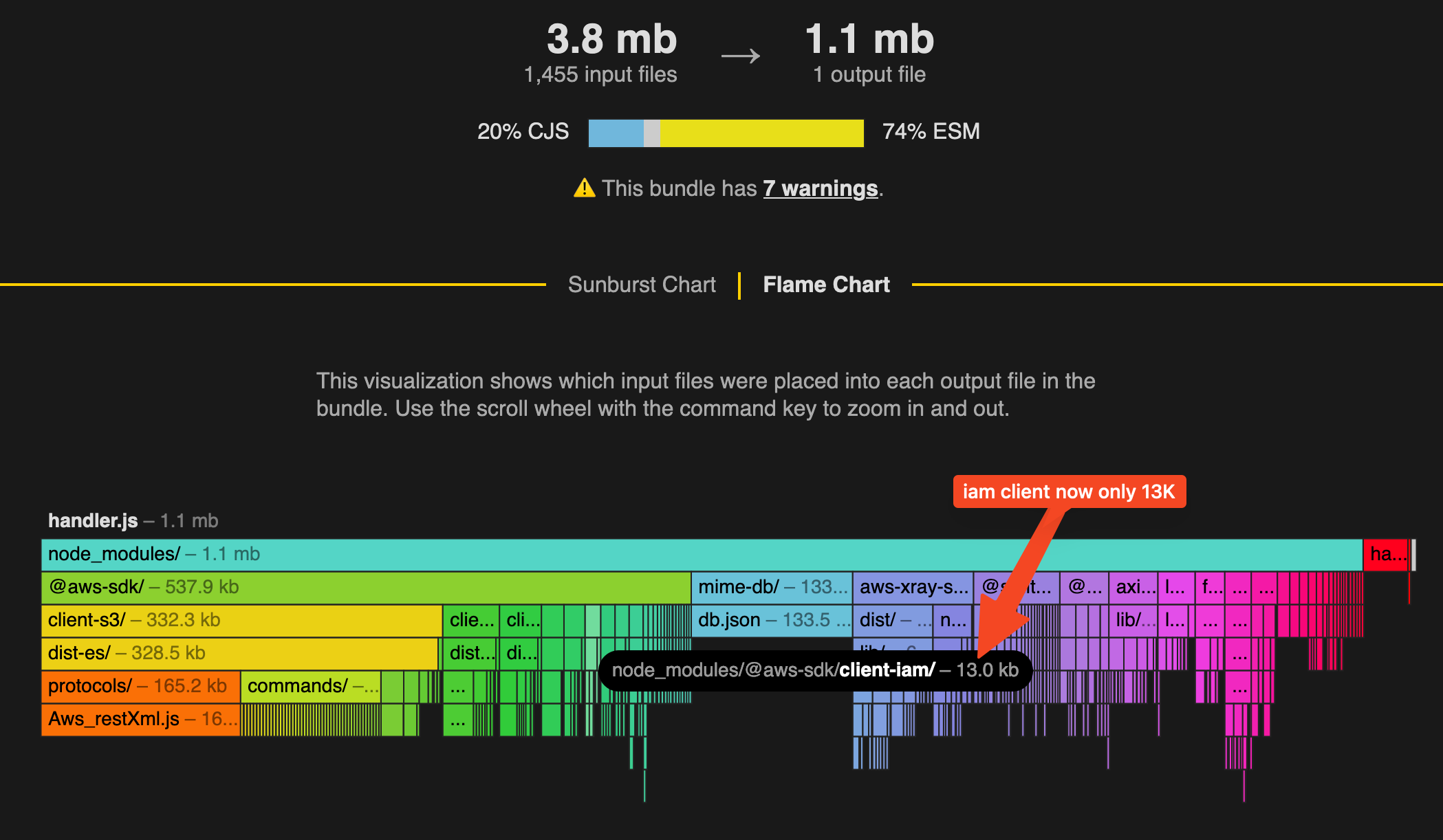
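
If you prefer a quick text summary over the visual analyzer, the metafile is plain JSON and easy to post-process. The sketch below is not part of my build; it assumes the documented metafile shape (`outputs[file].inputs[path].bytesInOutput`) and uses a hand-written sample object instead of a real build artifact. The helper names `bytesByPackage` and `packageOf` are made up for this example.

```javascript
// Map an input path to its package name; anything outside node_modules counts as "(own code)".
function packageOf(path) {
  const marker = 'node_modules/';
  const idx = path.lastIndexOf(marker);
  if (idx === -1) return '(own code)';
  const parts = path.slice(idx + marker.length).split('/');
  return parts[0].startsWith('@') ? `${parts[0]}/${parts[1]}` : parts[0];
}

// Sum the bytes each package contributes to the bundle, largest first.
function bytesByPackage(metafile) {
  const totals = new Map();
  for (const output of Object.values(metafile.outputs)) {
    for (const [path, info] of Object.entries(output.inputs || {})) {
      const name = packageOf(path);
      totals.set(name, (totals.get(name) || 0) + info.bytesInOutput);
    }
  }
  return [...totals.entries()].sort((a, b) => b[1] - a[1]);
}

// Hypothetical sample in the metafile shape; real numbers come from your own build.
const sampleMetafile = {
  outputs: {
    'index.js': {
      bytes: 1500,
      inputs: {
        'src/handler.js': { bytesInOutput: 200 },
        'node_modules/@aws-sdk/client-iam/dist-es/IAM.js': { bytesInOutput: 900 },
        'node_modules/@aws-sdk/client-iam/dist-es/index.js': { bytesInOutput: 100 },
        'node_modules/axios/lib/axios.js': { bytesInOutput: 300 },
      },
    },
  },
};

for (const [name, bytes] of bytesByPackage(sampleMetafile)) {
  console.log(`${String(bytes).padStart(8)}  ${name}`);
}
```

To use it on a real build, replace `sampleMetafile` with the parsed contents of the metafile your build writes out.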

Looking at the output, it was clear the @aws-sdk packages were the biggest offenders and tree shaking wasn't working properly. I found this GitHub Issue that explained how to fix it. I added the following to my serverless.yml:

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true
        metafile: true
        treeShaking: true
        mainFields:
          - 'module'
          - 'main'

This enabled tree shaking. The @aws-sdk/client-iam package went from 466K to 13K.

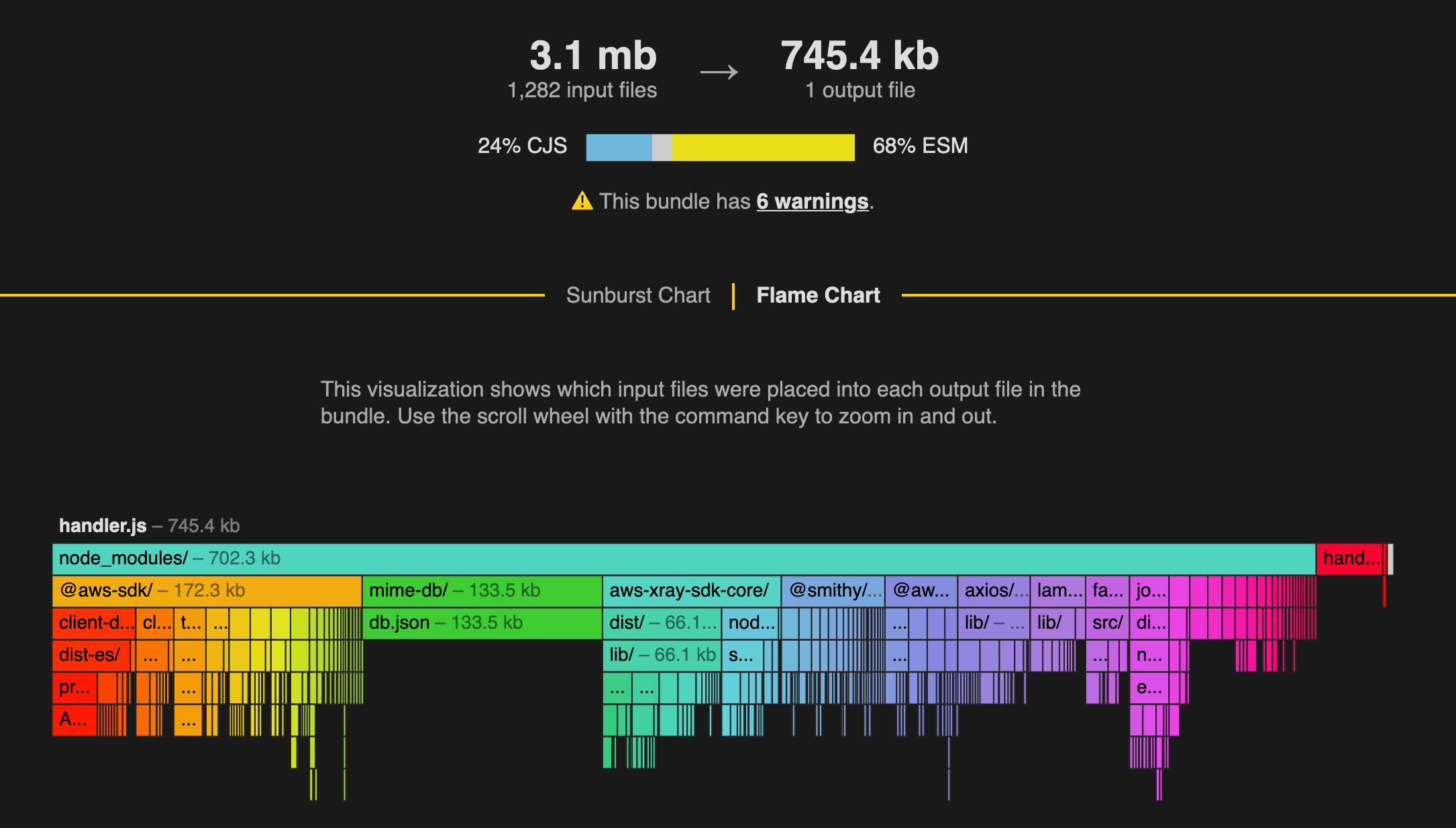
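
Why does the order of mainFields matter? When bundling for node, esbuild by default prefers a package's `main` entry (usually CommonJS) over `module` (usually ESM), and only ESM can be tree-shaken. The sketch below is a deliberately simplified model of that lookup, not esbuild's actual resolver (which also considers things like the `exports` field). The function `pickEntry` and the sample package.json are made up for illustration.

```javascript
// Return the first entry point named by `mainFields`, in order.
function pickEntry(pkgJson, mainFields) {
  for (const field of mainFields) {
    if (typeof pkgJson[field] === 'string') return pkgJson[field];
  }
  return 'index.js'; // conventional fallback when no field is present
}

// Hypothetical package.json for a package that ships both CommonJS and ESM builds.
const pkg = { main: './dist-cjs/index.js', module: './dist-es/index.js' };

console.log('main first:  ', pickEntry(pkg, ['main', 'module']));
console.log('module first:', pickEntry(pkg, ['module', 'main']));
```

With `module` listed first, the ESM build is picked whenever a package provides one, which is what lets the bundler drop unused exports.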

But I noticed a few other things in there: @aws-sdk/client-s3 remained, and neither it nor the aws-xray-sdk-core package was getting tree-shaken. @aws-sdk/client-s3 was a dependency of the lambda-api package and wasn't actually used in my code, so I removed it. The aws-xray-sdk-core package doesn't have the ESM build needed for tree shaking. I could have pulled it in using the Lambda layer for AWS PowerTools, but I left it for now. This is how I excluded the @aws-sdk/client-s3 package from bundling. You can do something similar if you want to omit the other @aws-sdk packages and use the versions that exist in the Lambda runtime:

Danger

Removing @aws-sdk packages like this is risky, because they must be lazy loaded and unused. If either of those isn't true, the on-disk version will be loaded, and that is super slow. To confirm that you are not using anything from disk, test your code using node 16, which only has the aws-sdk v2 baked into it. I had to submit a pull request to lambda-api to meet these requirements.

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true
        metafile: true
        treeShaking: true
        mainFields:
          - 'module'
          - 'main'
        exclude:
          - '@aws-sdk/client-s3'
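
To make "lazy loaded" concrete: the excluded module must not be required at the top of any file that runs during a coldstart, only inside the code path that actually needs it. Here is a minimal sketch of that pattern. The `lazy` helper is made up for this example, and a stand-in loader replaces the real `require('@aws-sdk/client-s3')` so the snippet runs without the package installed.

```javascript
// Wrap a loader so the module is only loaded on first use, then cached.
function lazy(load) {
  let cached;
  let loaded = false;
  return () => {
    if (!loaded) {
      cached = load();
      loaded = true;
    }
    return cached;
  };
}

// In real code the loader would be something like () => require('@aws-sdk/client-s3').
// A stand-in is used here so we can count how often it runs.
let loadCount = 0;
const getS3 = lazy(() => {
  loadCount += 1;
  return { name: 'fake-s3-module' };
});

console.log('loads before first use:', loadCount);
getS3();
getS3();
console.log('loads after two calls:', loadCount);
```

If nothing ever calls `getS3()`, the loader never runs, so the excluded package is never read from disk.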

I removed the metafile: true from my serverless.yml file and rebuilt.

Cool, the bundle went from 2.0 MB -> 745K uncompressed. Compressed, the bundle was now 955K with sourcemaps. Coldstart was 649ms and loading secrets was 435ms.

Removing sources content and json from sourcemaps

Adding the sourcemap was a major contributor to the bloat of the bundle. Using the source map visualizer I noticed that the sourcemaps were including the entire source. I really just needed method names and line numbers in my stacktraces. I added the following to my serverless.yml:

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true
        treeShaking: true
        mainFields:
          - 'module'
          - 'main'
        exclude:
          - '@aws-sdk/client-s3'
        keepNames: true
        sourcesContent: false
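
To see what sourcesContent: false removes, it helps to look at the shape of a version 3 source map. Stack traces only need the `sources`, `names` and `mappings` fields; `sourcesContent` is an embedded copy of the original files. The sketch below uses a tiny hand-written map (hypothetical, not from my bundle) and a made-up helper `stripSourcesContent` to show the effect on size.

```javascript
// Return a copy of a source map (v3) without the embedded original sources.
function stripSourcesContent(sourceMap) {
  const { sourcesContent, ...rest } = sourceMap;
  return rest;
}

// Hypothetical tiny source map; real ones are emitted by the bundler.
const map = {
  version: 3,
  sources: ['src/handler.js'],
  sourcesContent: ['export const handler = async () => ({ statusCode: 200 });\n'],
  names: ['handler'],
  mappings: 'AAAA',
};

const before = JSON.stringify(map).length;
const after = JSON.stringify(stripSourcesContent(map)).length;
console.log(`with sourcesContent: ${before} bytes, without: ${after} bytes`);
```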

That brought the compressed bundle down from 955K to 935K. Digging in further, I found the sourcemap contained the details for everything in node_modules. I mainly care about my own code, so I started looking into omitting node_modules from the sourcemap. I found this GitHub Issue and created a plugin as suggested. When I ran it, I discovered the sourcemap was also including json files, so I omitted those and, for good measure, reduced the mime-db json to only the mime type I needed. I also specified the copy loader for the files needed by the aws-xray-sdk-core package. Using the copy loader omitted them from the sourcemap and didn't cause json errors, since comments aren't allowed in json. This is what the final plugin looked like:

    const fs = require('fs');

    let excludeVendorFromSourceMap = {
      name: 'excludeVendorFromSourceMap',
      setup(build) {
        const sourceMapExcludeSuffix =
          '\n//# sourceMappingURL=data:application/json;base64,eyJ2ZXJzaW9uIjozLCJzb3VyY2VzIjpbIiJdLCJtYXBwaW5ncyI6IkEifQ==';

        // ignore source map for vendor files
        build.onLoad({ filter: /node_modules\/.*\.m?js$/ }, (args) => {
          return {
            contents: fs.readFileSync(args.path, 'utf8') + sourceMapExcludeSuffix,
            loader: 'default',
          };
        });

        // for .json files
        build.onLoad({ filter: /node_modules.*\.json$/ }, (args) => {
          // if it is the mime-db, replace the content with just the mime type we need
          var json = args.path.includes('/mime-db/db.json')
            ? `{"application/json":{"source":"iana","charset":"UTF-8","compressible":true,"extensions":["json","map"]}}`
            : fs.readFileSync(args.path, 'utf8');
          var js = `export default ${json}${sourceMapExcludeSuffix}`;

          // if it is in the /resources folder, use copy instead of trying to ignore it,
          // it is required by aws-xray-sdk-core
          return args.path.includes('/resources')
            ? { contents: json, loader: 'copy' }
            : { contents: js, loader: 'js' };
        });
      },
    };

    module.exports = [excludeVendorFromSourceMap];
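
The base64 blob in sourceMapExcludeSuffix looks like magic, but it is just a tiny inline source map with a single empty source and a one-segment mapping. As I understand it, the bundler then treats each vendor file as already having a source map that points at nothing, so the original vendor sources drop out of the final map. This snippet decodes the suffix so you can see for yourself:

```javascript
const suffix =
  '\n//# sourceMappingURL=data:application/json;base64,eyJ2ZXJzaW9uIjozLCJzb3VyY2VzIjpbIiJdLCJtYXBwaW5ncyI6IkEifQ==';

// Pull out the base64 payload of the data URL and decode it.
const base64 = suffix.split('base64,')[1];
const decoded = Buffer.from(base64, 'base64').toString('utf8');

console.log(decoded);
console.log(JSON.parse(decoded));
```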

And the corresponding serverless.yml to use it:

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true
        treeShaking: true
        mainFields:
          - 'module'
          - 'main'
        exclude:
          - '@aws-sdk/client-s3'
        keepNames: true
        sourcesContent: false
        plugins: plugins/excludeVendorFromSourceMap.js

This reduced the bundle from 935K to 223K. The coldstart was 650 ms and secrets loading was 345 ms, or 995 ms total.

Using the bigger CPU of initialization

I learned recently that during initialization, Lambda allocates more CPU and memory to the function. So one final thing I wanted to do was to move the secrets loading to initialization and see if more CPU would help the SSL connection setup go faster. To do this, I needed to use TLA (top level await). This required switching to ESM, adding a banner to support require and changing the output file extension to .mjs. It also required patching the serverless framework to support .mjs. This was my final serverless.yml:

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true
        treeShaking: true
        mainFields:
          - 'module'
          - 'main'
        exclude:
          - '@aws-sdk/client-s3'
        keepNames: true
        sourcesContent: false
        plugins: plugins/excludeVendorFromSourceMap.js
        format: esm
        outputFileExtension: .mjs
        banner:
          js: import { createRequire } from 'module';const require = (await import('node:module')).createRequire(import.meta.url);const __filename = (await import('node:url')).fileURLToPath(import.meta.url);const __dirname = (await import('node:path')).dirname(__filename);
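
The config only makes top level await possible; the handler code also has to do the loading at module scope. The sketch below is not my actual handler. It shows a closely related pattern that also runs as CommonJS: start the secrets fetch once when the module is evaluated and reuse the promise in every invocation. In the ESM build you would instead write `const secrets = await loadSecrets();` at the top level, which makes initialization wait for the fetch to finish. `createHandler` and `fakeLoadSecrets` are hypothetical names, and the fake loader stands in for a Secrets Manager call.

```javascript
// Build a handler that starts loading secrets as soon as this code is evaluated,
// so the fetch happens once per execution environment instead of once per request.
function createHandler(loadSecrets) {
  const secretsPromise = loadSecrets(); // kicked off during initialization

  return async function handler(event) {
    const secrets = await secretsPromise; // already resolved on warm invocations
    return { statusCode: 200, body: `signed with key ${secrets.keyId}` };
  };
}

// Hypothetical stand-in for a Secrets Manager call; counts how often it runs.
let fetchCount = 0;
async function fakeLoadSecrets() {
  fetchCount += 1;
  return { keyId: 'example-key' };
}

const handler = createHandler(fakeLoadSecrets);

handler({})
  .then(() => handler({}))
  .then((res) => console.log(res.statusCode, `secret fetches: ${fetchCount}`));
```

The difference matters: without top level await, the fetch is only started during initialization and may finish during the first invocation, so it does not get the full benefit described above.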

In the end, my bundle went from 3.4M to 223K (15.2x reduction) and my cold starts went from 1974 ms to 892 ms (2.2x reduction).

The biggest speedup was adding bundling. Adding sourcemaps actually caused a regression that was only countered by the final optimization.

The biggest reduction in size was adding bundling. Adding sourcemaps caused a regression but that was countered by the other optimizations to enable tree shaking and removal of unused sourcemap content.

What's next

I think I'm going to replace the axios library with something like wretch. What I'm doing to reduce the size of the mime types json is fairly sketchy and jumping to wretch obviates this. I'll also keep watching the aws-xray-sdk-core package to see if they add an ESM build and I might experiment with what using the lambda layer for AWS PowerTools does to my coldstarts. I suspect it will be a wash, but I'm curious. Finally, I may try priming the aws clients in the initialization code to see what that does.

The gold is in the comments

Update 9/27/2023: After tweeting this, there was some buzz in the Twitter comments and the rabbit hole got deeper.

Using the lambda layer for AWS PowerTools

I hadn't yet tested the Lambda layer for PowerTools. Obviously it would shrink the bundle size, but I wanted to know if it impacted coldstarts. I added the following to my serverless.yml:

    custom:
      esbuild:
        bundle: true
        minify: true
        sourcemap: true
        treeShaking: true
        mainFields:
          - 'module'
          - 'main'
        keepNames: true
        sourcesContent: false
        plugins: plugins/excludeVendorFromSourceMap.js
        format: esm
        outputFileExtension: .mjs
        banner:
          js: import { createRequire } from 'module';const require = (await import('node:module')).createRequire(import.meta.url);const __filename = (await import('node:url')).fileURLToPath(import.meta.url);const __dirname = (await import('node:path')).dirname(__filename);
        exclude:
          - '@aws-sdk/client-s3'
          - '@aws-lambda-powertools'
          - 'aws-xray-sdk-core'
    functions:
      speedrun-federation:
        layers:
          - !Sub 'arn:aws:lambda:${AWS::Region}:094274105915:layer:AWSLambdaPowertoolsTypeScript:18'

| Init without secrets (ms) | Lambda layer | Delta |
|---|---|---|
| 728 | none (esbuild) | +0 ms |
| 922 | v18 | +194 ms |
| 882 | v20 | +154 ms |
| 894 | v21 | +166 ms |

It was +171 ms on average, so I ripped layers out and followed up with the PowerTools team.

Switching to redaxios

@munawwarfiroz suggested that I use redaxios instead of axios.

Yet another thing to try: lazy load any heavy modules. https://t.co/tmOjFxsxEJ

I am wondering what if you don't minify, just bundle into one file (but still sourcemap on, for file names)

Also check redaxios

— Munawwar Firoz (@munawwarfiroz) September 26, 2023

I was already planning on switching to wretch, but this was a lightweight drop-in replacement for axios.

npm i redaxios

npm uninstall axios

-import axios from 'axios';

+import axios from 'redaxios';

This reduced the bundle size by 25K, from 223K to 198K, and after correcting for Secrets Manager latency the coldstart changed by somewhere between -7 ms and +25 ms. I chose to stick with it; the difference is lost in the noise.

Revisiting source maps

@thdxr mentioned that sourcemaps added a performance penalty.

fyi that source maps option has significant performance problems

i don’t really know why but there’s some deeply buried nodejs issues around it

would not recommend

— Dax (@thdxr) September 26, 2023

I'd actually seen his comment here when I was first exploring sourcemaps. It appears that a fix landed in node.js 18.8 in this issue #41541. As of this writing, Lambda is using 18.12. I tried to confirm it in this benchmark but they never tested with node.js 18.8. Regardless of whether it's fixed or not, it can't have a penalty if it's not there right? What if we turn off minify and sourcemaps? That will make the bundle bigger, but I'll have stacktraces for everything including the packages I depend on. With treeshaking, my final bundled code fits within the limits of the Lambda console so I can easily go to the line number. Let's do this! Here's the final serverless.yml:

    custom:
      esbuild:
        bundle: true
        # minify: true
        # sourcemap: true
        treeShaking: true
        mainFields:
          - 'module'
          - 'main'
        keepNames: true
        # sourcesContent: false
        plugins: plugins/excludeVendorFromSourceMap.js
        format: esm
        outputFileExtension: .mjs
        banner:
          js: import { createRequire } from 'module';const require = (await import('node:module')).createRequire(import.meta.url);const __filename = (await import('node:url')).fileURLToPath(import.meta.url);const __dirname = (await import('node:path')).dirname(__filename);
        exclude:
          - '@aws-sdk/client-s3'

I also removed the NODE_OPTIONS: '--enable-source-maps' environment variable.

Note

I attempted to remove the plugin too and use:

    loader:
      '.json': 'copy'

But that failed with this error:

    ✖ TypeError [ERR_IMPORT_ASSERTION_TYPE_MISSING]: Module "file:///Users/david/Documents/code/speedrun-api/.esbuild/.build/partitions-SKMMJENC.json" needs an import assertion of type "json"

If you can't use plugins, just remove it completely and don't add the loader line.

This one felt fast to me when I tested it. Turns out it was. The bundle grew 87k from 198k to 285k, but the coldstart dropped 85 ms from 898 to 813 ms. The exhilaration was fleeting, I ran a few more coldstarts and saw 904 ms, 873 ms and 863 ms. But still, 30ms faster than my previous best on average. Note that these tests were run without correcting for secrets loading latency which is +/-20ms. This is just out of that margin and gives better stack traces so I kept it.

For a final test, I tried throwing exceptions with sourcemaps on and off. There still appears to be a penalty for sourcemaps even with the fix that landed in node.js 18.8. I was seeing an additional 45 ms or so on warmed instances with sourcemaps on. That settles it, I'll leave sourcemaps off!
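
If you want to run a similar comparison yourself, here is a rough sketch of a micro-benchmark. It is not the harness I used. It times how long it takes to create an Error and read its `.stack` (the stack string is formatted when it is read, which is where source map lookups would come in). To see a difference it has to run inside bundled code that actually has a sourcemap, once with `NODE_OPTIONS=--enable-source-maps` and once without. On its own, as below, it only gives you a baseline. The name `averageStackCostMs` is made up for this example.

```javascript
const { performance } = require('node:perf_hooks');

// Average time in ms to create an Error and read its formatted stack.
function averageStackCostMs(iterations) {
  const start = performance.now();
  for (let i = 0; i < iterations; i += 1) {
    const stack = new Error(`probe ${i}`).stack;
    if (typeof stack !== 'string') throw new Error('unexpected stack type');
  }
  return (performance.now() - start) / iterations;
}

averageStackCostMs(100); // warm up before measuring
console.log(`avg per stack trace: ${averageStackCostMs(1000).toFixed(4)} ms`);
```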

That's weird, why is SSO bundled in there?

Poking around the bundle some more, I noticed that the @aws-sdk/client-sso package was in there for loading credentials. If you know anything about credentials in Lambda, credentials are injected via environment variables. There is no end user interaction so it can't possibly get credentials using SSO. Since SSO adds 39 ms when minified to the coldstart if you use any AWS v3 client, it's surprising there is no way to omit it. I opened this issue with some code on how to patch the default credentials provider if you don't need it. It's unfortunate the SDK team didn't pursue this.

Further reading

I found Robert Slootjes' article about optimization after I had independently done something very similar. He cuts right to the final configuration, but doesn't go as deep as I do.

Yan Cui wrote All you need to know about lambda cold starts. He concludes that bundling and tree shaking are the most important optimizations.

AJ Stuyvenberg has a few coldstart benchmarks comparing the AWS V2 and V3 javascript SDKs and the difference bundling makes.

This AWS blog article talks about Using ESM and TLA (top level await) with Lambda which is how I used the higher compute power of initialization to speed up my secrets loading.

Finally, cost optimization is the other dimension for optimization in Lambda. This Serverlessland article about Cost Optimization describes a few approaches to reduce your costs.