Bifurcating Lambda Logs

It is common to emit two log types; application logs and request logs from a service application. In EC2, ECS and Fargate these logs can be pumped to separate CloudWatch Log Groups using the CloudWatch Agent, FireLens and FluentBit. Unfortunately in Lambda, these logs can only go to one log group. In this post, I explore a method for pumping request logs to a separate log group to workaround this Lambda limitation.

Why

If you've read my post on Logging for scale, you'll know there are two log types. Application logs which are emitted many times per request and request logs which are emitted once per request. Application logs are verbose and used to trace a request through your application. Request logs are information dense and summarize the request. By keeping them separate, you can set different retention polices for cost savings and improve query speeds. I care most about request logs. An application logs' value fades with time, so let's aim for the middle here. We'll write application logs and request logs to one Log Group with a short retention. Then we'll stream just the request logs to a second Log Group with longer retention.

How

I wasn't able to find a no-code way of bifurcating logs in Lambda. Amazon Data Firehose doesn't support routing to CloudWatch Logs as a sink and the effort to support FluentBit in Lambda died on the vine. Arguably using the Lambda Telemetry API to send application logs directly to CloudWatch is the best approach, but I was looking for something with less effort. I turned my attention to using a CloudWatch Subscription Filter to route request logs to a 2nd Lambda that writes them to a new Log Group.

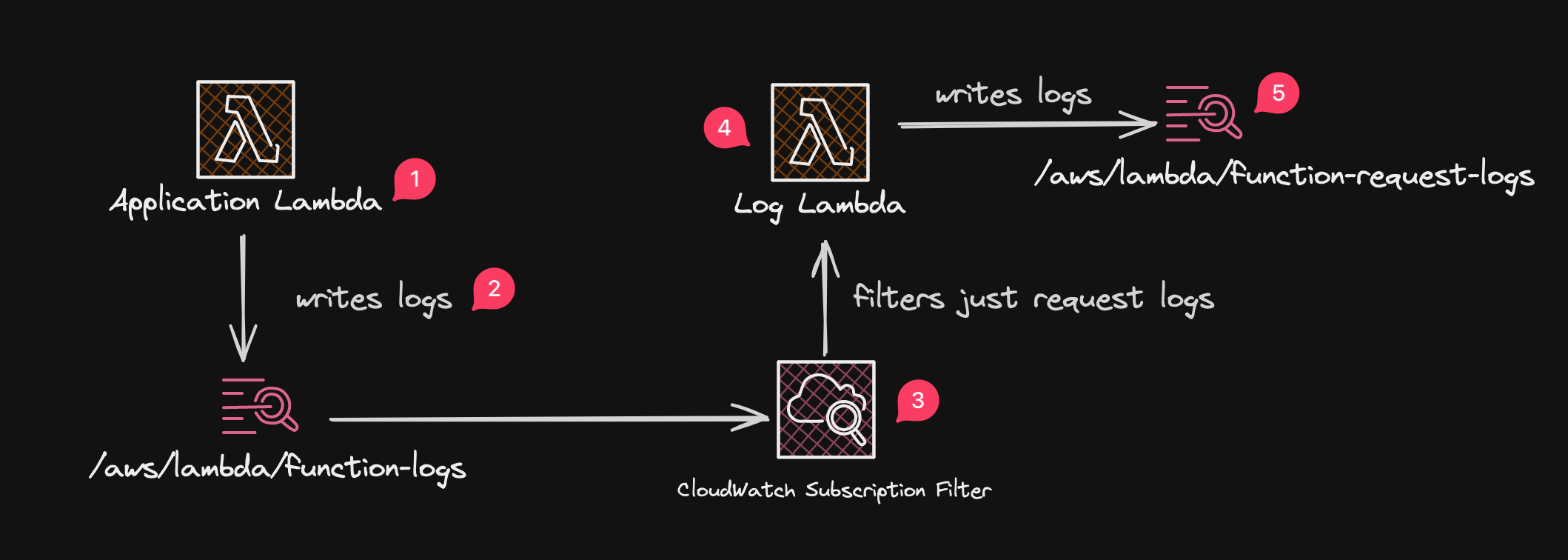

Here's the architecture:

Logs in (1) and (2) are emitted by the application Lambda function. They include both application and request logs. The CloudWatch Subscription Filter (3) routes request logs to the logs Lambda (4) which does little more than write them to its own log file (5).

Implementation

The code for this post is in this GitHub repository

I implemented a simple Lambda function url using the Lambda PowerTools Metrics and Logger to emit both application and request logs. The application logs have content like Starting booking and the request logs have a fault metric and some metadata about the request.

{

"cold_start": true,

"function_arn": "arn:aws:lambda:us-east-2:3208XXXXXXXX:function:airline-service:prod",

"function_memory_size": 128,

"function_name": "airline-service",

"function_request_id": "44a6d320-9be4-4c2e-873f-afcd7fe04d66",

"level": "INFO",

"message": "Starting booking",

"service": "bookings",

"timestamp": "2024-02-27T18:07:15.668Z",

"xray_trace_id": "1-65de24d3-7f9389073be6a65a435b0cf0"

}

{

"_aws": {

"Timestamp": 1709057235720,

"CloudWatchMetrics": [

{

"Namespace": "serverlessAirline",

"Dimensions": [

[

"service"

]

],

"Metrics": [

{

"Name": "fault",

"Unit": "Count"

}

]

}

]

},

"requestId": "44a6d320-9be4-4c2e-873f-afcd7fe04d66",

"service": "bookings",

"fault": 0,

"path": "/",

"ip": "97.XXX.XXX.XXX",

"userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:123.0) Gecko/20100101 Firefox/123.0",

"codeVersion": "5"

}

Here is my simple handler that emits application and request logs:

| handler.ts | |

|---|---|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 | |

I created a CDK stack that uses a CloudWatch Logs Subscription filter to route the request logs (the ones with "_aws" in them) to a second Lambda.

Why not use Infrequent Access?

I originally wanted to use the Infrequent Access class on the second log group, but ultimately went with standard access (Line 24 below). I found that when you query infrequent access logs with CloudWatch Insights, you can't query using json fields! A simple filter fault=1 becomes this:

parse @message /"fault":(?<fault>\d)/ |

filter ispresent(fault) and fault = 1

| logfilter-stack.ts | |

|---|---|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 | |

I implemented a second Lambda function that writes the request logs prefixed by timestamp. Since I don't want the metrics to be double-published, I remove them before writing the log 1. In the CDK code, note how I set the logFormat to JSON and set systemLogLevel to WARN on lines 49-50. This is so I don't get the Lambda telemetry details like REPORT lines in the logs.

use aws_lambda_events::event::cloudwatch_logs::LogsEvent;

use chrono::NaiveDateTime;

use lambda_runtime::{run, service_fn, Error, LambdaEvent};

use serde_json::Value;

async fn function_handler(event: LambdaEvent<LogsEvent>) -> Result<(), Error> {

//For each log event

for record in event.payload.aws_logs.data.log_events {

// Parse as JSON

let mut json: Value = serde_json::from_str(&record.message).unwrap();

// Rip out _aws.CloudWatchMetrics, we don't want to publish metrics again

json.as_object_mut()

.unwrap()

.get_mut("_aws")

.unwrap()

.as_object_mut()

.unwrap()

.remove("CloudWatchMetrics");

// Print log entry to stdout prefixed by original timestamp in ISO8601 format

println!(

"{} {}",

NaiveDateTime::from_timestamp_millis(record.timestamp)

.unwrap()

.format("%Y-%m-%dT%H:%M:%S%.3fZ")

.to_string(),

json

);

}

Ok(())

}

#[tokio::main]

async fn main() -> Result<(), Error> {

run(service_fn(function_handler)).await

}

The result

I got really close to what I wanted, but fell short. The logs in the second log group don't maintain the timestamp of when they were originally published. Prefixing the log lines with the original timestamp when writing them doesn't fix this, Lambda appears to use the timestamp of when the log was written to STDOUT as the timestamp when publishing. I found a workaround, but it isn't ideal. To bin by original timestamp I can use a CloudWatch Insights query like this:

fields fromMillis(`_aws.Timestamp`) as @t

| stats sum(fault) by datefloor(@t, 1m)

Due to these shortcomings, I didn't create a cost savings calculator spreadsheet or do the math to see at what ratios of application logs to request logs I would save money. I still may revisit this in the future and implement bifurcating logs to CloudWatch as a Telemetry API extension.

Conclusion

If you are ok with doing extra work to handle timestamps, you can bifurcate lambda logs using a CloudWatch Subscription Filter to route a subset of logs to a second Lambda. If not you probably need to roll your own Telemetry API extension. If you have any better ideas or comments I'd love to hear them on Twitter.

Further reading

- Details about the different CloudWatch Logs Types

- The Lambda Telemetry API

- If I was implementing this as a Telemetry API extension, I'd probably use this crate

-

If I was using infrequent access logs I wouldn't need to do this. ↩