Migrating to LLRT

With each release of LLRT (Low Latency Runtime for Lambda), I've tried to use it to run my production API and failed. Recently I took a different approach and navigated the last few hurdles to migrate my API from Node 22 to LLRT. In this post, I'll share how I did it, the challenges I faced, and some benchmarks.

What is LLRT?

LLRT is a newer experimental runtime for AWS Lambda that is designed to run JavaScript applications with better startup performance and at a lower cost than Node.js. It is built in Rust using the QuickJS JavaScript engine and has several native optimizations to improve the performance of Lambda functions that interact with AWS services. It landed with a lot of fanfare in October 2023 and it aims to have WinterCG compatibility. On paper this means that it should be able to run most JavaScript applications. In practice, you are likely to need to make modifications before your code runs on LLRT. With that said, any required modifications should be compatible with Node.js if you need to revert because LLRT doesn't pan out.

The Background

I've written several articles about optimizing my Lambda Node.js API. In my last article, I speculated that I had plateaued on improving the performance. To go further, I could rewrite my API in Rust or Go, or I could keep the code the same and use LLRT. I've made several attempts to try LLRT in the past but each time I tested it on my production code, I hit a new roadblock. It would either be missing an essential function or it would hang. I was able to successfully migrate basic apps, but never made it far with my API. After getting some traction on LLRT bugs related to Top Level Await and the IAM Client hanging, I decided to see how far I could get.

The Migration

My approach was to migrate each route in my API one by one. Attempting a lift and shift to LLRT didn't work: none of my APIs ran. So I started copying code over into a totally new project to see if I could get one API to work. You are likely to encounter some of the same challenges I faced, so I've briefly described what they were and how I solved them.

Challenge 1: The runtime hangs

I was instantiating AWS clients during initialization and the runtime would hang.

INIT_START Runtime Version: provided:al2023.v107 Runtime Version ARN: arn:aws:lambda:us-east-2::runtime:32b91443ff3693bfd023844d0cb7ae4edf3af83483e1435f1f4303b987a4ecf8

INIT_REPORT Init Duration: 9999.61 ms Phase: init Status: timeout

INIT_REPORT Init Duration: 3003.49 ms Phase: invoke Status: timeout

START RequestId: 08fb0f3a-277d-4a1b-8b9c-146d6ffc8acd Version: 24

2025-09-05T20:58:31.533Z 08fb0f3a-277d-4a1b-8b9c-146d6ffc8acd Task timed out after 3.01 seconds

END RequestId: 08fb0f3a-277d-4a1b-8b9c-146d6ffc8acd

REPORT RequestId: 08fb0f3a-277d-4a1b-8b9c-146d6ffc8acd Duration: 3006.09 ms Billed Duration: 3000 ms Memory Size: 512 MB Max Memory Used: 28 MB

It turned out the hangs were due to two bugs in LLRT: one with instantiating AWS clients during initialization and one with using AWS clients that have global endpoints. Both issues were fixed in 0.7.0-beta after I filed issues for them.

If you're encountering hangs that don't occur in Node.js, your best bet is to create a repro and submit an issue. The repository owners are quite responsive.

Challenge 2: Swapping out jose

I use jose to mint and verify JWT tokens for authentication. Unfortunately, some of the crypto functionality jose relies on is not yet supported by LLRT. Attempting to use jose to mint tokens in LLRT results in the following error:

SyntaxError: Could not find export 'KeyObject' in module 'crypto'

To get around this, I needed a JWT library that only used SubtleCrypto. I discovered that hono, the web application framework, had such an implementation. In addition, lambda-api, the framework I had been using, is not very active anymore, so it was a good opportunity to swap. It turns out switching from lambda-api to hono was easy. There were minor changes to middleware, context, and returning responses, and most of the code I had written could be reused. With this experiment, I proved I could do the first part of all my APIs, which was authentication.
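To make "only uses SubtleCrypto" concrete, here is a minimal sketch of minting and verifying an HS256 token with nothing but the Web Crypto API. This is not hono's implementation and not what my API runs; the function names (signJwt, verifyJwt) and the choice of HS256 are assumptions for illustration, and it assumes the runtime exposes the crypto, btoa, atob, TextEncoder and TextDecoder globals.

```typescript
// Illustrative HS256 JWT sign/verify using only Web Crypto (SubtleCrypto).
// A sketch under stated assumptions, not a hardened JWT library.
const encoder = new TextEncoder();
const decoder = new TextDecoder();

// Encode bytes as unpadded base64url, the encoding JWT segments use.
function toBase64Url(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) binary += String.fromCharCode(bytes[i]);
  return btoa(binary).replace(/\+/g, "-").replace(/\//g, "_").replace(/=+$/, "");
}

// Decode unpadded base64url back into bytes.
function fromBase64Url(text: string) {
  const base64 = text.replace(/-/g, "+").replace(/_/g, "/");
  const binary = atob(base64 + "=".repeat((4 - (base64.length % 4)) % 4));
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) bytes[i] = binary.charCodeAt(i);
  return bytes;
}

// Import a shared secret as a non-extractable HMAC-SHA-256 key.
function importHmacKey(secret: string): Promise<CryptoKey> {
  return crypto.subtle.importKey(
    "raw",
    encoder.encode(secret),
    { name: "HMAC", hash: "SHA-256" },
    false,
    ["sign", "verify"],
  );
}

// Build header.payload, sign it, and append the signature segment.
async function signJwt(payload: Record<string, unknown>, secret: string): Promise<string> {
  const header = toBase64Url(encoder.encode(JSON.stringify({ alg: "HS256", typ: "JWT" })));
  const body = toBase64Url(encoder.encode(JSON.stringify(payload)));
  const signingInput = `${header}.${body}`;
  const key = await importHmacKey(secret);
  const signature = await crypto.subtle.sign("HMAC", key, encoder.encode(signingInput));
  return `${signingInput}.${toBase64Url(new Uint8Array(signature))}`;
}

// Return the payload if the signature checks out and the token has not expired, else null.
async function verifyJwt(token: string, secret: string): Promise<Record<string, unknown> | null> {
  const parts = token.split(".");
  if (parts.length !== 3) return null;
  const [headerPart, payloadPart, signaturePart] = parts;
  try {
    const header = JSON.parse(decoder.decode(fromBase64Url(headerPart)));
    if (header.alg !== "HS256") return null;
    const key = await importHmacKey(secret);
    const valid = await crypto.subtle.verify(
      "HMAC",
      key,
      fromBase64Url(signaturePart),
      encoder.encode(`${headerPart}.${payloadPart}`),
    );
    if (!valid) return null;
    const payload = JSON.parse(decoder.decode(fromBase64Url(payloadPart)));
    const now = Math.floor(Date.now() / 1000);
    if (typeof payload.exp === "number" && payload.exp < now) return null;
    return payload;
  } catch {
    return null; // malformed base64url or JSON
  }
}
```

Nothing here touches KeyObject or any other Node-only crypto export, which is the property I needed from a JWT library on LLRT. In practice I'd still lean on a maintained library rather than hand-rolling this.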

Tip

You can review LLRT's compatibility matrix here to get a sense of what libraries you might need to swap.

Challenge 3: Missing Request functionality

LLRT has native implementations of some JavaScript APIs, including the fetch API and the crypto API. Because these APIs are implemented natively, an HTTP request uses fewer resources and runs faster. The specific piece that was missing for me was the ability to process HTTP forms, because the formData method is not available on Request. Here's what the error looked like:

2025-10-09T22:56:46.433Z a2b604ad-0495-4cb1-b470-b634b08bde21 ERROR TypeError: not a function

at #cachedBody (/var/task/index.mjs:387:34)

at formData (/var/task/index.mjs:402:12)

at parseFormData (/var/task/index.mjs:59:34)

at parseBody (/var/task/index.mjs:54:26)

at parseBody (/var/task/index.mjs:370:58)

at <anonymous> (/var/task/index.mjs:1761:15)

at #dispatch (/var/task/index.mjs:839:39)

at fetch (/var/task/index.mjs:865:27)

at <anonymous> (/var/task/index.mjs:1529:34)

I just needed basic form functionality, so I wrote the following shim to handle form parsing. In this case, c.req.text() resolves to the raw body of the request:

Object.fromEntries(new URLSearchParams(await c.req.text()))
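For reference, here is the same shim wrapped in a small helper, as a sketch. The name parseUrlEncodedForm is made up for this example. It only handles application/x-www-form-urlencoded bodies (not multipart uploads), and if a field appears more than once, only the last value is kept.

```typescript
// Parse an application/x-www-form-urlencoded body into a plain object.
// URLSearchParams handles percent-decoding and turns "+" into a space.
function parseUrlEncodedForm(body: string): Record<string, string> {
  return Object.fromEntries(new URLSearchParams(body));
}

// Hypothetical usage inside a hono handler:
//   const form = parseUrlEncodedForm(await c.req.text());

// Standalone example with a hard-coded body:
const exampleForm = parseUrlEncodedForm("name=Ada+Lovelace&redirect=%2Fhome");
console.log(exampleForm.name, exampleForm.redirect);
```

That was enough for my forms. If you need file uploads or repeated fields, you'll need something more complete.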

Challenge 4: 200 ms slower coldstarts

When I was porting my API over, I was running in the us-east-2 region. Once it was working to my satisfaction, I tried running it in us-west-2 and noticed coldstarts were almost 200 ms slower. The code was the same, so what was going on? The cause was that I instantiate a bunch of AWS JavaScript clients outside of the handler to reuse them across requests and take advantage of the higher CPU during init. It turns out LLRT does something the AWS JavaScript SDK doesn't do: on instantiation, it primes the clients by making requests to their service endpoints in parallel. This warms the SSL connection while you have the higher CPU during init, so the first request in your handler is faster. The problem is that a service with a global endpoint needs a roundtrip to that global endpoint. If the global endpoint is not geographically close, the latency of that roundtrip far outweighs the time saved by warming the connection during init. I disabled this behavior by setting the environment variable LLRT_SDK_CONNECTION_WARMUP=0.
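If you deploy with the CDK, one way to set this is through the function's environment. The snippet below is a sketch: the llrtEnvironment name is made up, and passing it via an environment prop assumes LlrtFunction accepts the same props as NodejsFunction.

```typescript
// Environment variables for the LLRT function. Lambda environment values are
// always strings, so the flag is "0" rather than the number 0.
const llrtEnvironment: Record<string, string> = {
  LLRT_SDK_CONNECTION_WARMUP: "0", // turn off connection warmup during init
};

// Assumed usage inside a CDK stack (hypothetical, adapt to your construct):
//   new LlrtFunction(this, 'lambda', { /* other props */ environment: llrtEnvironment });
```

Whether this helps depends on where your function runs relative to the endpoints it talks to, so measure coldstarts with and without it.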

Tip

Read about the other runtime environment variables here

Challenge 5: A no downtime migration

Warning

Don't attempt to do this unless you have a test environment in a different account that you can practice on. Without it, you will miss something and have downtime. This is a great time to set one up if you don't already have one.

Once my API was fully working, I needed to connect it to my domain without downtime. If you are using something like API Gateway or CloudFront, this is a straightforward process.

- Deploy the API to a Lambda with a new name, but with the same execution role, log group and environment variables.

- Point API Gateway or CloudFront to the new Lambda at a different path. In my case I had https://speedrun-api-beta.us-west-2.nobackspacecrew.com/v1 pointing at the Node 22 Lambda API, so I pointed https://speedrun-api-beta.us-west-2.nobackspacecrew.com/v2 at the LLRT Lambda API.

- Test the API thoroughly, including validating the metrics and logs coming out of it.

- Once satisfied, point the old path (/v1) to the new Lambda.

- Remove the old Lambda.
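The testing step above can be partly automated. Here is a rough sketch of a parity check that requests the same routes on the old and new paths and compares status codes and bodies. The checkParity helper, the FetchLike type and the /health route are all hypothetical, and this only covers responses; you still need to check metrics and logs yourself.

```typescript
// Hypothetical parity check between the old (/v1) and new (/v2) base paths.
// The fetch function is injected so the check can run against a stub in tests.
type FetchLike = (url: string) => Promise<{ status: number; text(): Promise<string> }>;

interface ParityResult {
  route: string;
  oldStatus: number;
  newStatus: number;
  matches: boolean; // true when both status and body are identical
}

async function checkParity(
  oldBase: string,
  newBase: string,
  routes: string[],
  fetchImpl: FetchLike,
): Promise<ParityResult[]> {
  const results: ParityResult[] = [];
  for (const route of routes) {
    // Request the same route on both deployments at the same time.
    const [oldRes, newRes] = await Promise.all([
      fetchImpl(oldBase + route),
      fetchImpl(newBase + route),
    ]);
    const [oldBody, newBody] = await Promise.all([oldRes.text(), newRes.text()]);
    results.push({
      route,
      oldStatus: oldRes.status,
      newStatus: newRes.status,
      matches: oldRes.status === newRes.status && oldBody === newBody,
    });
  }
  return results;
}

// Hypothetical usage with the global fetch:
//   const report = await checkParity("https://example.com/v1", "https://example.com/v2", ["/health"], fetch);
//   console.table(report);
```

Responses that contain timestamps or request IDs will never match byte for byte, so a real check would need to normalize those fields first.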

One thing that makes this easier is using the CDK (Cloud Development Kit). The CDK construct called LlrtFunction is a drop-in replacement for the NodejsFunction construct. While I was migrating my API to LLRT, I used both LlrtFunction and NodejsFunction. When something failed in LLRT, I'd revert to NodejsFunction as a sanity check. I can easily comment out one to switch between them.

const fn = new LlrtFunction(this, 'lambda', {
  functionName,
  entry: 'lambda/index.ts',
  role: lambdaRole,
  handler: 'handler',
  memorySize: 256,
  llrtBinaryType: LlrtBinaryType.FULL_SDK,
  architecture: lambda.Architecture.ARM_64,
  bundling: {
    minify: false,
    define: {
      "process.env.sdkVersion": JSON.stringify(
        JSON.parse(
          readFileSync(
            "node_modules/@aws-sdk/client-sts/package.json"
          ).toString()
        ).version
      ),
      "process.env.DOMAIN_NAME": JSON.stringify(config.domainName),
    },
  }
})

// const fn = new NodejsFunction(this, 'lambda', {
//   functionName,
//   entry: 'lambda/index.ts',
//   role: lambdaRole,
//   handler: 'handler',
//   runtime: lambda.Runtime.NODEJS_22_X,
//   memorySize: 1024,
//   bundling: {
//     bundleAwsSDK: true,
//     format: OutputFormat.ESM,
//     mainFields: ["module", "main"],
//     minify: false,
//     define: {
//       "process.env.sdkVersion": JSON.stringify(
//         JSON.parse(
//           readFileSync(
//             "node_modules/@aws-sdk/client-sts/package.json"
//           ).toString()
//         ).version
//       ),
//       "process.env.DOMAIN_NAME": JSON.stringify(config.domainName),
//     },
//     banner: "import { createRequire } from 'module';const require = createRequire(import.meta.url);",
//     externalModules: [
//       "@aws-sdk/client-sso",
//       "@aws-sdk/client-sso-oidc",
//       "@smithy/credential-provider-imds",
//       "@aws-sdk/credential-provider-ini",
//       "@aws-sdk/credential-provider-http",
//       "@aws-sdk/credential-provider-process",
//       "@aws-sdk/credential-provider-sso",
//       "@aws-sdk/credential-provider-web-identity",
//       "@aws-sdk/token-providers"
//     ]
//   }
// })

Tip

You'll notice I've set minify: false in my bundling config. I've never had good luck with sourcemaps and minified code. I don't think the small performance boost in parsing the code is worth it for LLRT.

Benchmarks

This effort wouldn't be worth it if it didn't improve my API performance, so let's see how it performs. Here are a few comparisons from before and after. Note that I'm using 512MB less memory with LLRT.

| Benchmark | Node.js | LLRT | Delta | Improvement |
|---|---|---|---|---|
| Init Duration | 382 ms | 155 ms | -227 ms | 59% |
| Console Federation | 133 ms | 95 ms | -38 ms | 29% |
| BatchGetItem of 3 Items from DynamoDB | 129 ms | 55 ms | -74 ms | 57% |

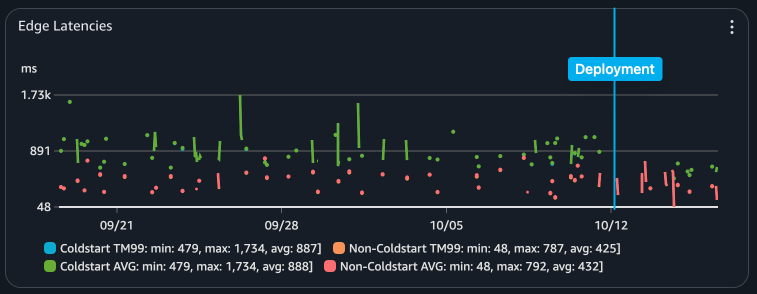

Finally, here is a graph of my E2E performance as measured from edge locations around the world. Although I had a slight issue with my metrics after deployment (coldstarts weren't reported separately), you can see that my max request time dropped from 1734 ms to 703 ms. Huge!

Conclusion

Migrating to LLRT was a success. It was a bunch of work, but I updated the web framework I was using, dropped a dependency and improved my API performance. If, for some reason, LLRT lets me down in the future, I can easily switch back to Node.js. I hope this post inspires you to give LLRT a try and gives you the insight to make it through the initial hurdles, especially if your API is mainly glue code invoking AWS services (the sweet spot for LLRT). If you have questions, reach out to me on Bluesky.

Further Reading

- The LLRT GitHub Repository

- Masashi Tomooka's LLRT CDK Construct for simplifying deployment.

- Yan Cui's First impressions on LLRT with a few more insights into what makes it fast.